Learn to run GGUF models, including GPT4All GGUF models, with Ollama by converting them into Ollama models with the FROM instruction.

Ollama is a large language model runner.

https://github.com/ollama/ollama

https://hub.docker.com/r/ollama/ollama

https://github.com/ollama/ollama-python

https://github.com/ollama/ollama-js

GPT4All is a free-to-use, locally running, privacy-aware chatbot: an open-source LLM chatbot that you can run anywhere, with no GPU or internet connection required.

https://github.com/nomic-ai/gpt4all

Step 01: Create a Modelfile with the command below.

touch Modelfile

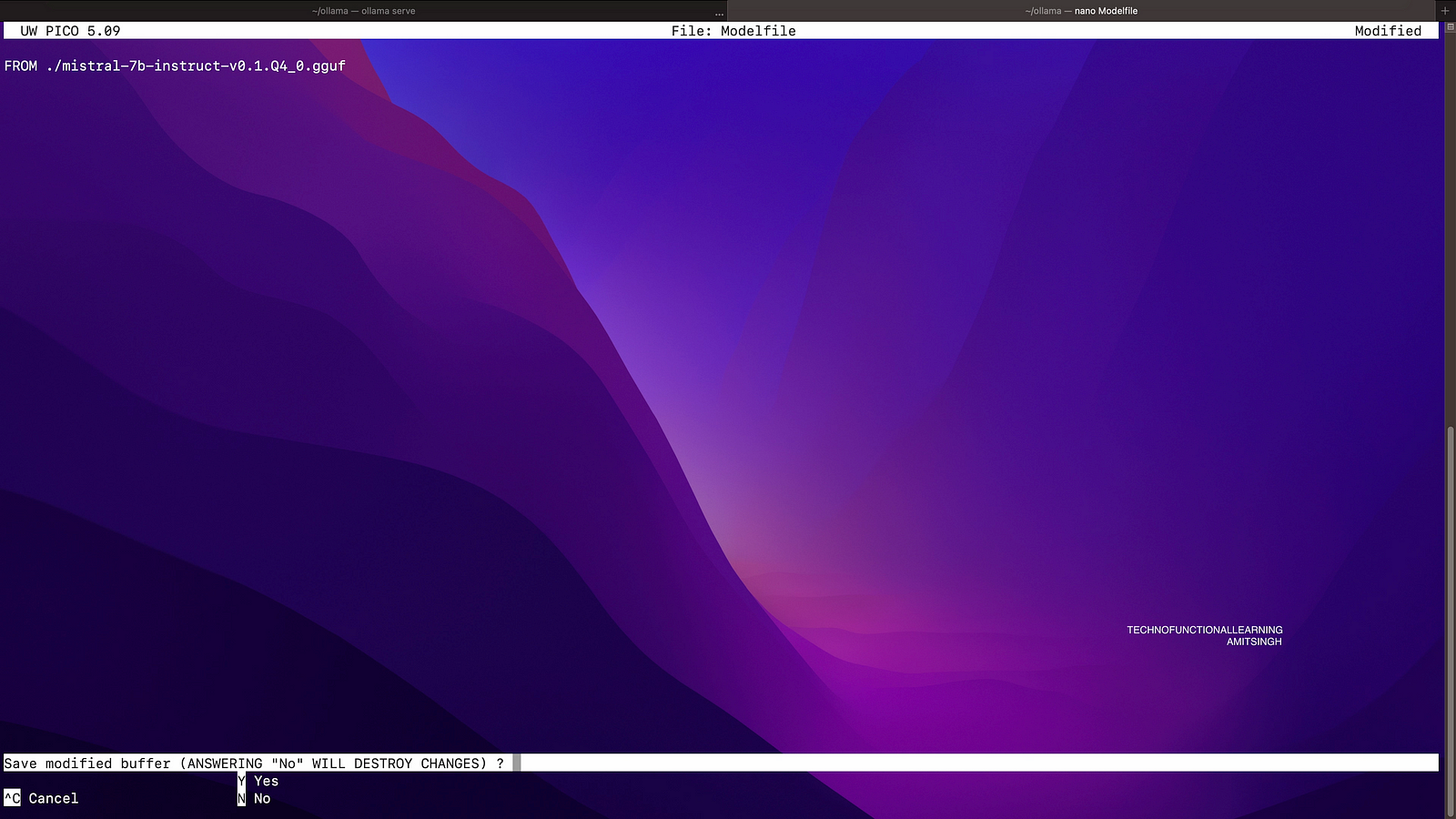

Step 02: Open the Modelfile in an editor with the command below.

nano Modelfile

Step 03: Insert the line below so that the Modelfile points at the GGUF model. Note the space between FROM and the file path.

FROM ./mistral-7b-instruct-v0.1.Q4_0.gguf
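If you prefer not to use an editor, Steps 01 to 03 can probably be collapsed into a single command. This is only a sketch: it assumes the GGUF file sits in the current directory under the name used in this tutorial, and it overwrites any existing Modelfile.

```shell
# Write the Modelfile in one step instead of using touch + nano.
# Assumption: mistral-7b-instruct-v0.1.Q4_0.gguf is in the current directory.
cat > Modelfile <<'EOF'
FROM ./mistral-7b-instruct-v0.1.Q4_0.gguf
EOF

# Print the file to confirm the FROM line was written as expected.
cat Modelfile
```

The quoted `'EOF'` marker keeps the shell from expanding anything inside the heredoc, so the line is written exactly as typed.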

Step 04: Close the editor with Ctrl+X, press Y to save the Modelfile, and press Enter to confirm the file name. Then run the command below in the terminal to convert the GGUF model into the Ollama model format.

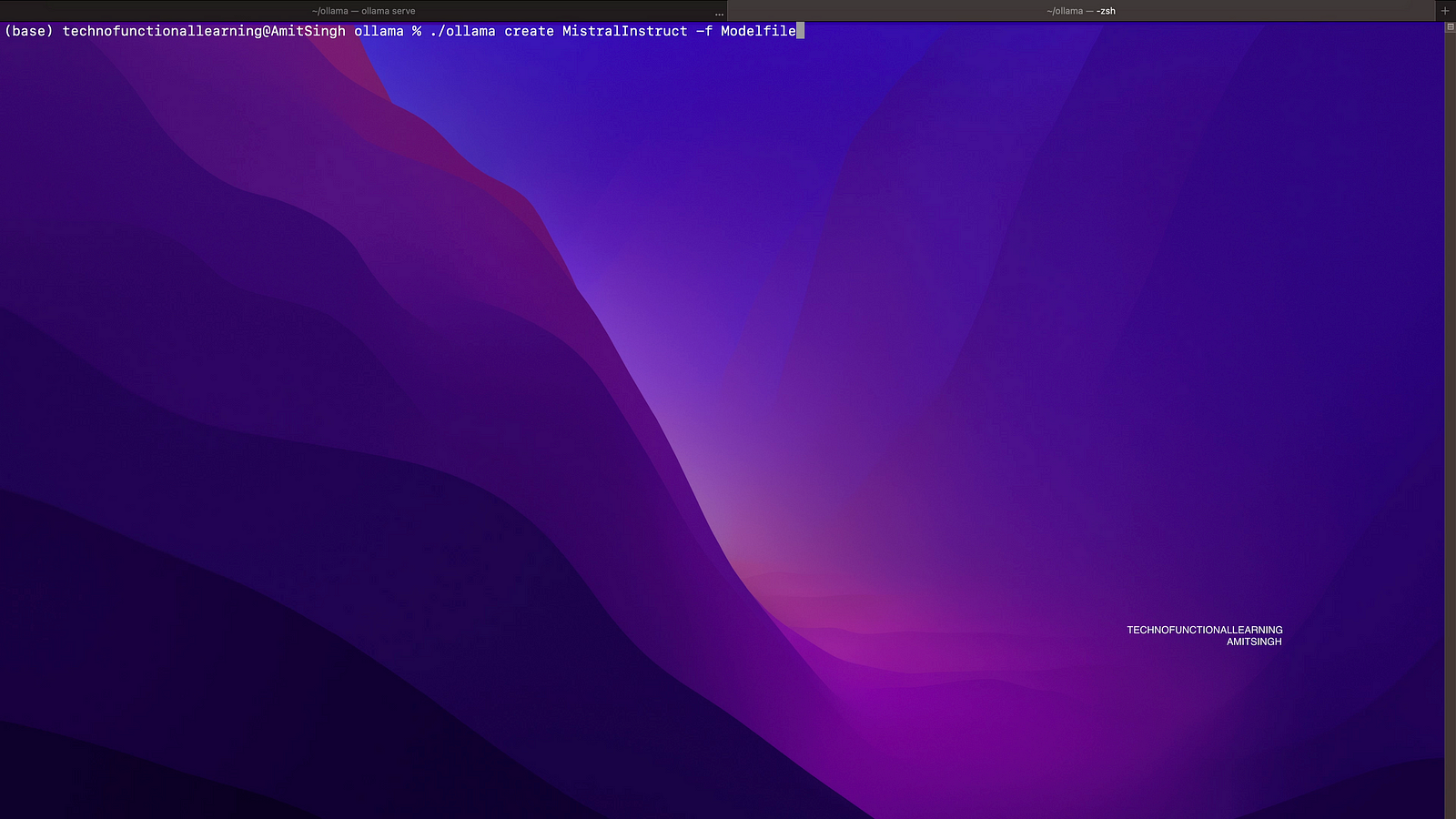

./ollama create MistralInstruct -f Modelfile
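A common cause of a failed conversion is a FROM line that does not resolve to a real file. The helper below is a hypothetical pre-flight check, not part of Ollama: `check_modelfile` is a name made up for this sketch, it assumes you run it from the directory that contains the Modelfile, and it assumes the model path contains no spaces.

```shell
# Hypothetical helper: succeeds only if the first FROM instruction in the
# Modelfile names a file that exists (path resolved against the current directory).
check_modelfile() {
  cm_modelfile="${1:-Modelfile}"
  # Read the second field of the first line whose first field is FROM.
  # A line such as "FROM./model.gguf" (no space) does not match and is rejected.
  cm_path=$(awk 'toupper($1) == "FROM" { print $2; exit }' "$cm_modelfile" 2>/dev/null)
  [ -n "$cm_path" ] && [ -f "$cm_path" ]
}
```

One way to use it is to gate the conversion on the check: `check_modelfile Modelfile && ./ollama create MistralInstruct -f Modelfile`.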

Step 05: Once the model has been converted, Ollama shows a success message; otherwise it shows an error.

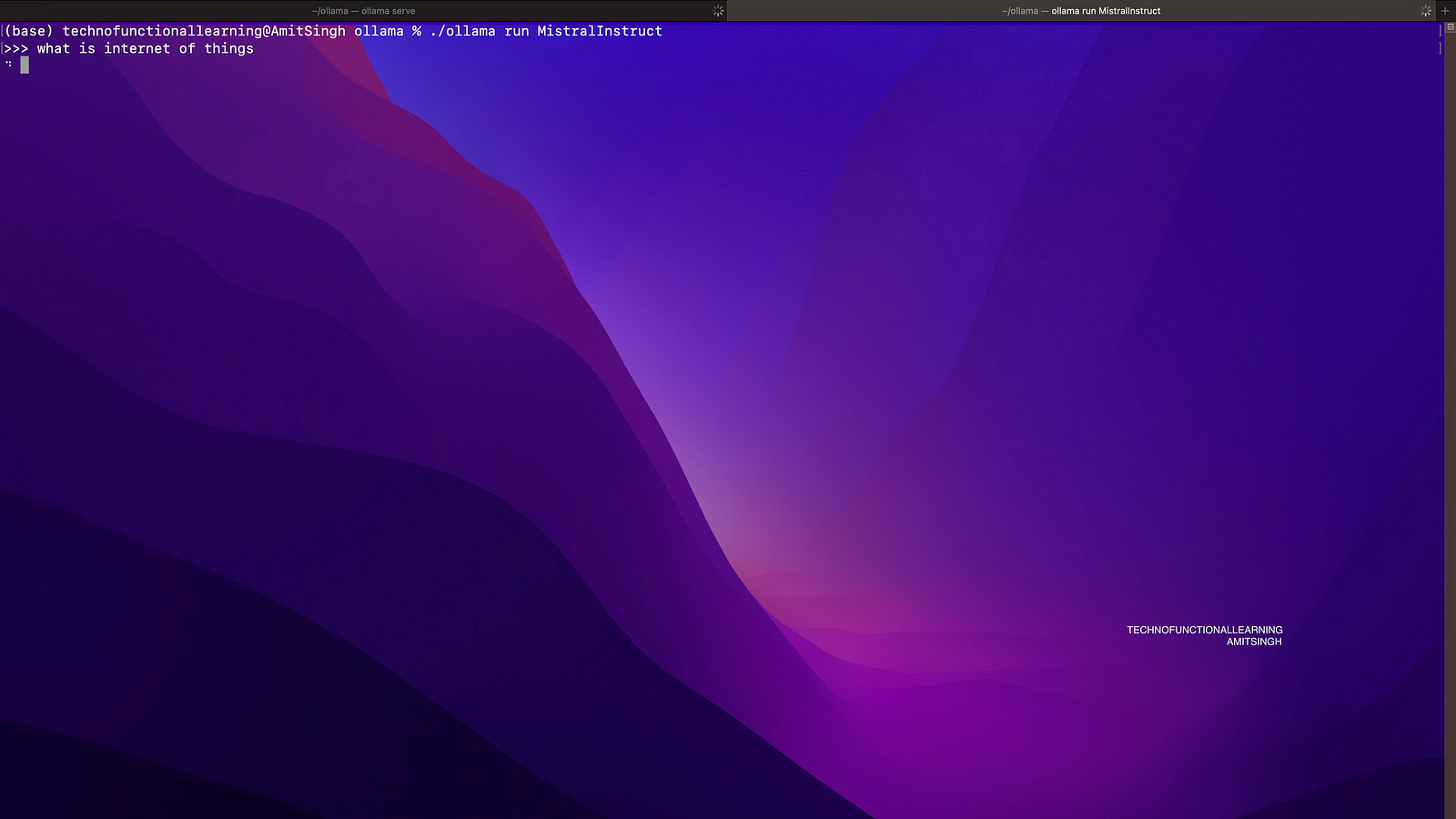

Step 06: Run the new model with the command below.

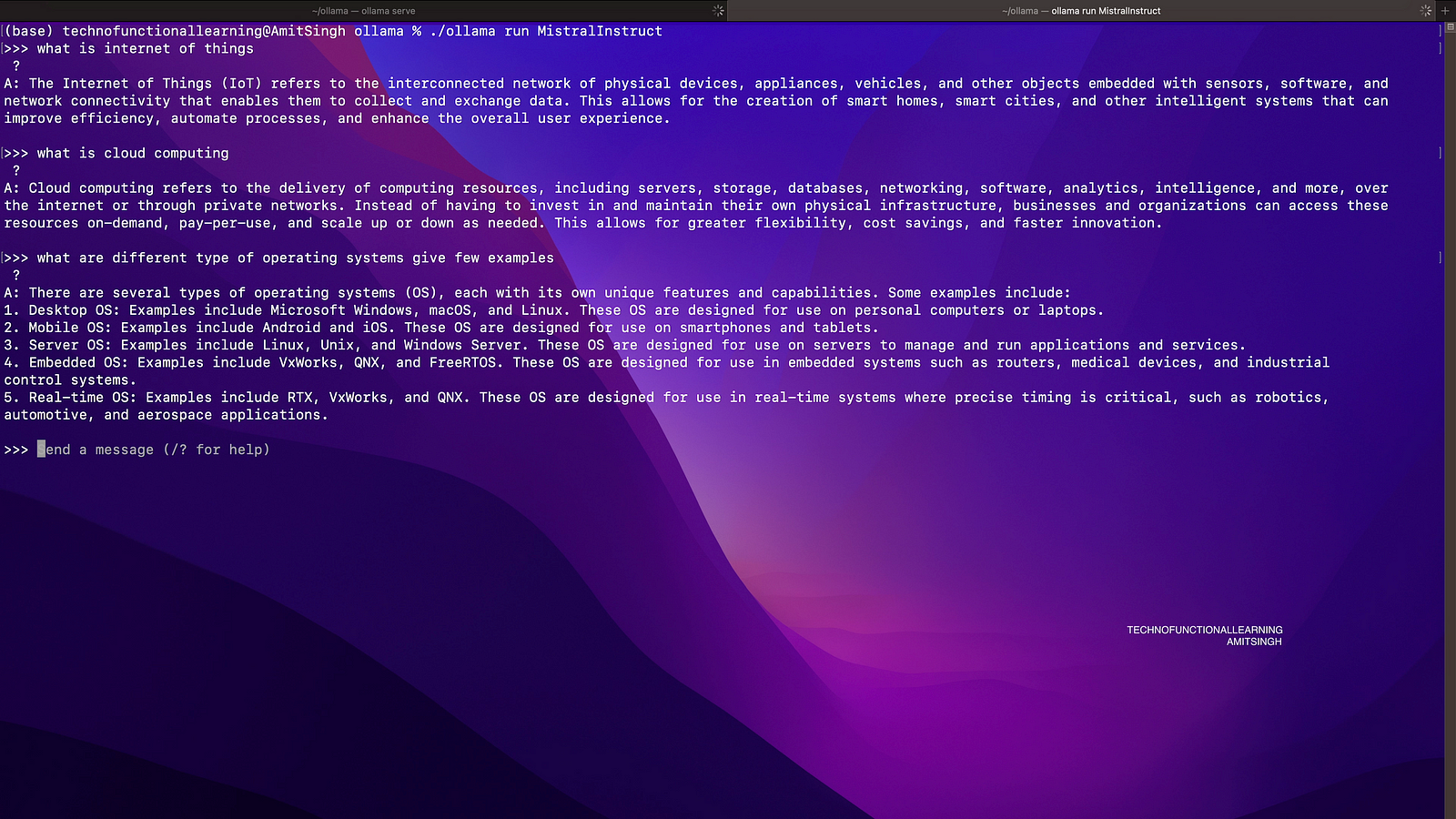

./ollama run MistralInstruct

Step 07: Ask your questions to the newly converted model.
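Besides the interactive prompt, questions can also be sent over Ollama's local HTTP API. The sketch below assumes the Ollama server is listening on its default address, `http://localhost:11434`, and that `curl` is installed. `build_payload` is a hypothetical helper written for this example, and the question text is only a placeholder.

```shell
# Hypothetical helper: build the JSON body for Ollama's /api/generate endpoint.
# $1 = model name, $2 = prompt.
# Assumption: neither value contains double quotes or backslashes (no JSON escaping is done).
build_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Send the question only if a local Ollama server answers on the default port.
if curl -s --max-time 2 -o /dev/null http://localhost:11434/; then
  curl -s http://localhost:11434/api/generate \
    -d "$(build_payload MistralInstruct 'Why is the sky blue?')"
fi
```

With `"stream": false` the server returns a single JSON object instead of a stream of partial results, and the model's answer is in its `response` field.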
